Introduction

The constant evolution of Earth observation missions has made it possible to acquire large amounts of satellite data, which have considerably changed the way humanity manages its territory. One major example is the Copernicus program developed by the European Space Agency (ESA), which deploys several constellations of satellites to monitor the Earth's surface. This is the case of the Sentinel missions, which currently consist of six missions designed as two-satellite constellations. Sentinel-1 and Sentinel-2 provide free, open-access Synthetic Aperture Radar (SAR) and multispectral imagery, respectively, with a very short revisit time: 5 days for Sentinel-2A/2B and between 5 and 10 days, depending on the location on Earth, for Sentinel-1A/1B.

The high revisit frequency of these two sensors allows the use of time series to study the dynamics and evolution of processes and objects of interest. Satellite Image Time Series (SITS) have already been used for the analysis of agricultural areas [1] [2] [3] [4] and forest areas [5] [6], and for the classification of Land Use/Land Cover (LULC) [7]. In addition, the joint use of SAR and optical data is attracting growing interest from researchers, especially for LULC mapping [8] [9]. This combination has already proven effective in many other works, such as the detection of natural areas [10] [11], the detection of changes [12] or the mapping of urban areas [13].

To deal with this increasing amount of data, new techniques based on neural networks have been developed and offer promising results for the classification of LULC from multiple data sources [14]. Multimodal and multitemporal datasets are currently quite rare and remain for the most part specific to one application (object detection, scene classification, semantic segmentation or instance segmentation), although a variety of datasets is important for the application of deep learning models. To our knowledge, only two datasets use Sentinel-1/Sentinel-2 pairs for scene classification or semantic segmentation: BigEarthNet [15] and SEN12MS [16]. BigEarthNet provides 590,326 pairs of single-date images annotated with the Corine Land Cover reference data over 10 European countries. SEN12MS offers 180,662 triplets of Sentinel-1, Sentinel-2 and MODIS Land Cover data over several regions of the world for the spring, summer and winter seasons, intended for semantic segmentation.

To address the lack of multimodal and multitemporal datasets, we decided to produce MultiSenGE, a benchmark dataset covering the Grand-Est region in France (57,433 km^2, which represents 10.6% of the French territory). The benchmark dataset targets semantic segmentation and classification. It offers triplets of Sentinel-1, Sentinel-2 and LULC data, the latter being a land cover product recently released under an open-source licence over this territory. Compared to other existing datasets, MultiSenGE makes it possible to classify urban surfaces into 5 LULC classes, against only 1 for SEN12MS, which uses MODIS Land Cover as reference data, and 11 for BigEarthNet, which uses CORINE Land Cover as reference data. We use OCSGE2-GEOGRANDEST, which has the advantage of a Minimum Mapping Unit (MMU) of less than 50 m^2. This gives it a higher geometric accuracy than existing LULC products and makes it consistent with the spatial resolution of Sentinel imagery (10 m).

We first present the satellite and LULC data available over the study area and used to build the dataset. We then describe the methodology used to process the reference data and to create the triplets of patches. Finally, baseline results obtained on the beta version of MultiSenGE, using only the urban thematic classes, are described before the conclusion and perspectives.

Reference data and satellite imagery

Study site and reference data

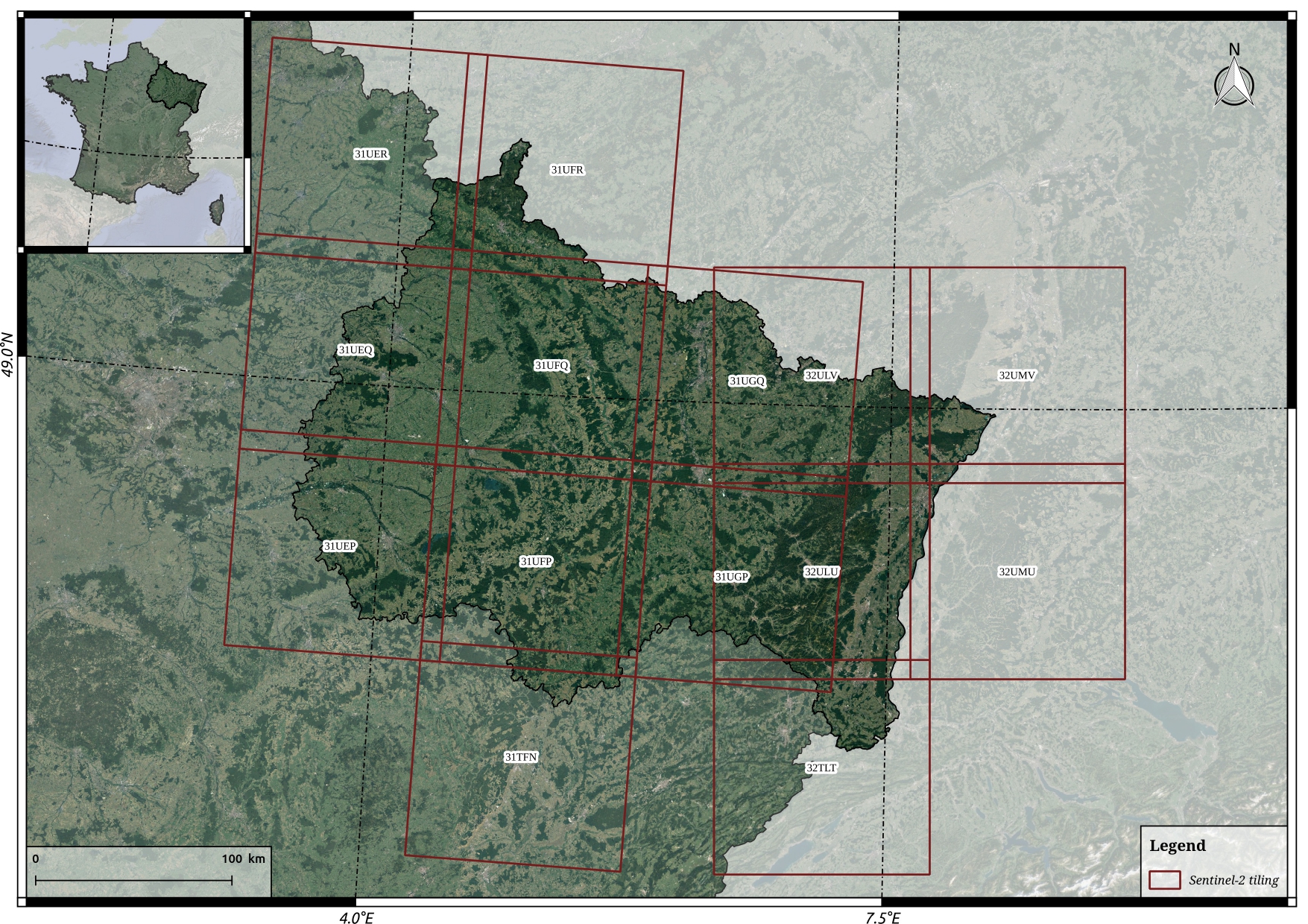

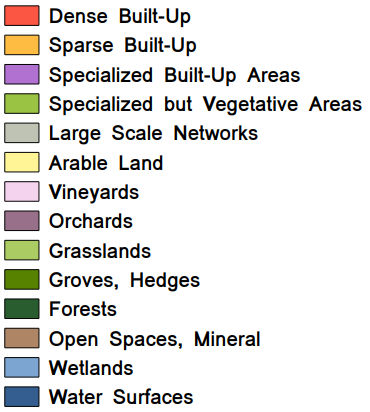

The dataset covers a large territory (57,433 km^2) in eastern France that corresponds to a French administrative region (Figure ) extending from Alsace in the east to the Ardennes and Marne in the west. This area was chosen because of the availability of a new, accurate and up-to-date vector LULC database named OCSGE2-GEOGRANDEST (www.geograndest.fr). This open-access vector database was built by visual interpretation of aerial photographs for 2019/2020. It is organized into four levels of nomenclature, where the first level categorizes land cover into four classes: (1) artificial surfaces, (2) agricultural areas, (3) forest areas and (4) water surfaces. At the most detailed level (1:10,000), 53 LULC classes map the region and the size of the smallest elements is 50 m^2. To obtain generic reference data with 14 classes and class consistency at 10 m spatial resolution, several preprocessing steps are performed on the original topographic vector database (see the Reference data processing section). In the OCSGE2 layer, all roads have the same degree of importance, and some of them are too small to be distinguished at 10 m spatial resolution. Therefore, to produce a suitable road network label, a second database (BDTOPO-IGN), produced by IGN and describing roads as vector lines with their degree of importance, is pre-processed.

Sentinel-2

Sentinel-2 offers 13 spectral bands at 10 m and 20 m spatial resolution. The Satellite Image Time Series (SITS) for 2020 (14 tiles - Figure 2) are downloaded from the Theia land services and data center (https://www.theia-land.fr/) in L2A format, that is, as a surface reflectance product corrected for atmospheric effects and delivered with a cloud mask. Only images with a cloud cover strictly below 10% are automatically downloaded by querying the Theia database with the script theia_download [17]. For the MultiSenGE dataset, the 10 spectral bands at 10 meters (B2, B3, B4, B8) and 20 meters (B5, B6, B7, B8A, B11 and B12) spatial resolution are used.

Sentinel-1

Sentinel-1 is equipped with a C-band SAR sensor which, unlike optical sensors, acquires imagery day and night regardless of weather conditions. It provides data in dual polarization with two product types: Ground Range Detected (GRD) and Single Look Complex (SLC) [18]. GRD products, used to construct this dataset, consist of SAR data that have been multi-looked and projected to ground range using an Earth ellipsoid model.

The SAR images available in ascending and descending orbits for 2020 were downloaded and pre-processed using the S1-Tiling [19] processing chain developed by CNES (Centre National d’Etudes Spatiales). This processing chain can be divided into four steps: (1) automatic downloading of Sentinel-1 data with the EODAG library, which can query different servers so that data are always available over the study area, (2) slicing of the SAR data according to the Sentinel-2 tiling, (3) orthorectification of the newly sliced SAR scenes and (4) application of a multi-temporal filter to reduce speckle while preserving spatial information.

MultiSenGE production

Reference data processing

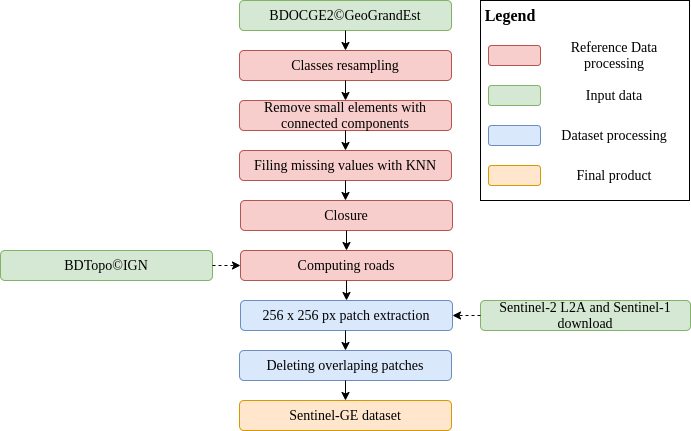

To obtain LULC data at 10 meters spatial resolution, five pre-processing steps are applied to the reference data (Figure 2). The five steps consist of (1) resampling the labeled reference data, (2) removing the smallest polygons with a connected component labeling method applied to each selected class, (3) filling the resulting holes by nearest neighbor, (4) applying a morphological closing to smooth the outlines of each class in the final data and (5) adding roads from the second database.

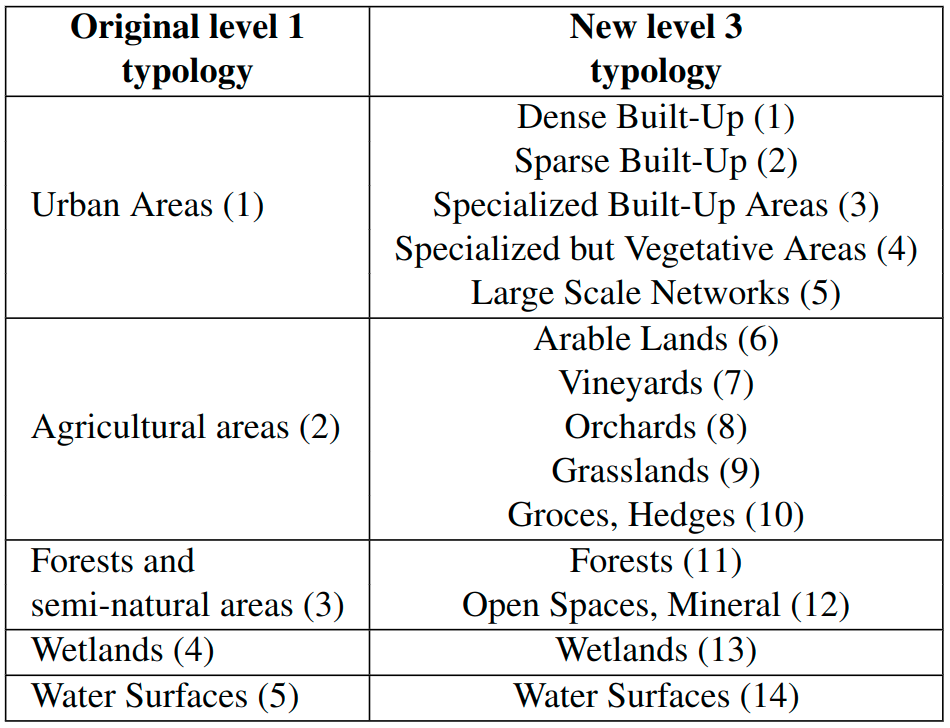

First, to obtain a number of labeled classes adapted to the spatial resolution, we kept the classes at level 3 of the nomenclature and applied some semantic reclassification to reduce the complexity of the typology, especially for semantic segmentation applications. The final LULC typology includes 14 classes, with 5 classes for urban areas and 9 classes for natural surfaces (Table 1).

The second step reduces the spatial complexity of the reference data, which can contain small polygons (50 m^2) that are not visible at 10 m and would add noise to the database. This important step relies on a connected components method to extract the polygons of each class and sort them by area. All polygons smaller than 2.5 ha (a total surface of 250 pixels) are left blank for each class, a threshold that is finer than the MMU of some existing LULC products such as Corine Land Cover (https://land.copernicus.eu/pan-european/corine-land-cover). This value is an empirical choice made after several tests. In the third step, a nearest neighbor method is applied to fill the holes. Using the Euclidean distance, it finds the nearest neighbors of each missing value and estimates that value as the mean of their values [20], with a higher weight assigned to the closest neighbors. In the fourth step, a morphological closing with a rectangular structuring element is applied to smooth the new level 3 reference data (Figure 3). Finally, large-scale networks are added in post-processing: railways come from OCSGE2-GEOGRANDEST and the most important road networks from BDTOPO-IGN. A buffer of 30 meters is applied for highways and 10 meters for the second most important roads or railways, which is consistent with the 10 m spatial resolution. After all the vector data are merged into a single vector layer, all polygons are rasterized at 10 m spatial resolution.
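The second and third steps can be illustrated with a minimal, standard-library-only sketch on a toy label grid. It is not the authors' implementation: the `NODATA` value, the function names and the use of 4-connectivity are assumptions, and the hole filling is simplified to copying the single nearest label rather than the weighted nearest-neighbor mean of [20]. The helper `min_pixels` only checks the arithmetic behind the threshold (2.5 ha at 100 m^2 per pixel is 250 pixels).

```python
from collections import deque

NODATA = 0                # assumed placeholder for pixels left blank
PIXEL_AREA_M2 = 10 * 10   # one pixel at 10 m spatial resolution


def min_pixels(min_area_ha):
    """Convert an area threshold in hectares into a pixel count."""
    return int(min_area_ha * 10_000 / PIXEL_AREA_M2)


def components(grid):
    """Yield the pixel list of each 4-connected region of equal labels."""
    rows, cols = len(grid), len(grid[0])
    seen = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or grid[r][c] == NODATA:
                continue
            label, region, queue = grid[r][c], [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and not seen[ny][nx] and grid[ny][nx] == label:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            yield region


def remove_small(grid, threshold):
    """Blank every region that has fewer than `threshold` pixels."""
    out = [row[:] for row in grid]
    for region in components(grid):
        if len(region) < threshold:
            for y, x in region:
                out[y][x] = NODATA
    return out


def fill_nearest(grid):
    """Give each blank pixel the label of its nearest labelled pixel."""
    labelled = [(y, x, v) for y, row in enumerate(grid)
                for x, v in enumerate(row) if v != NODATA]
    out = [row[:] for row in grid]
    for y, row in enumerate(grid):
        for x, v in enumerate(row):
            if v == NODATA:
                nearest = min(labelled,
                              key=lambda p: (p[0] - y) ** 2 + (p[1] - x) ** 2)
                out[y][x] = nearest[2]
    return out


# Toy example: the isolated pixel of class 2 is removed, then refilled.
grid = [
    [1, 1, 1, 1],
    [1, 2, 1, 1],
    [1, 1, 1, 3],
    [1, 1, 3, 3],
]
cleaned = fill_nearest(remove_small(grid, threshold=2))
```

On real 10 m rasters the threshold would be `min_pixels(2.5)`, and an array library would be far more efficient than these nested loops.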

Triplet data preparation

The Sentinel-1 SAR SITS, the Sentinel-2 optical SITS and the reference data are cut into 256 x 256 pixel patches. The VV and VH bands from the pre-processed Sentinel-1 images are stacked for each patch. For Sentinel-2, the bands at 10 meters are kept and the bands at 20 meters are resampled to 10 meters spatial resolution using cubic interpolation so that the final data are homogeneous. The 10 bands are then stacked for each patch. Finally, the simplified LULC reference data are cut following the same footprint to build triplets with the dual-pol Sentinel-1 image patches and the multispectral Sentinel-2 image patches. This results in triplets containing the reference data, the Sentinel-2 time series and the Sentinel-1 time series, each with its own number of dates per patch (m for Sentinel-1). In a post-processing step, overlapping patches are removed so that all patches in the dataset are spatially independent. This overlap mainly concerns the 10 km superposition area between adjacent tiles.
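As an illustration of the patch cutting, the sketch below enumerates the upper-left corners of non-overlapping 256 x 256 windows over one tile. It assumes a regular grid without stride overlap inside a tile and a tile of 10,980 x 10,980 pixels (the size of a Sentinel-2 tile at 10 m); the function names are hypothetical and the actual production code may differ.

```python
PATCH_SIZE = 256  # patch side in pixels, as stated in the text


def patch_origins(width, height, size=PATCH_SIZE):
    """Return the (x, y) upper-left pixel of every full, non-overlapping patch."""
    return [(x, y)
            for y in range(0, height - size + 1, size)
            for x in range(0, width - size + 1, size)]


def cut(raster, x, y, size=PATCH_SIZE):
    """Extract one size x size window from a raster stored as a list of rows."""
    return [row[x:x + size] for row in raster[y:y + size]]


# One Sentinel-2 tile at 10 m spatial resolution (assumed 10,980 x 10,980 pixels).
origins = patch_origins(10980, 10980)
print(len(origins), origins[0], origins[-1])

# The same footprint is applied to every layer of a triplet.
toy_raster = [[r * 4 + c for c in range(4)] for r in range(4)]
window = cut(toy_raster, 2, 2, size=2)
```

Using the same origin list for the Sentinel-1 stack, the Sentinel-2 stack and the reference raster is what keeps the three members of a triplet aligned.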

Structure of the benchmark dataset

Each triplet is described by a GeoJSON label file that supports both scene classification and semantic segmentation (Figure 5). In addition to the classes present in the patch, this file contains the names of all associated Sentinel-2 and Sentinel-1 patches as well as the projection of the patch, which is in UTM/WGS84 and inherited from its original tile. The current version of the dataset contains 8,157 non-overlapping triplets, each with its GeoJSON file. The level 3 reference data mosaic and its typology (Figure 4) will also be available for download.

MultiSenGE contains four folders with (1) the simplified reference data patches, (2) the Sentinel-2 patches, (3) the Sentinel-1 patches and (4) the label files. The files contained in the folders are named according to the following conventions:

- ground_reference : {tile}_GR_{x-pixel-coordinate}_{y-pixel-coordinate}.tif

- s2 : {tile}_{date}_S2_{x-pixel-coordinate}_{y-pixel-coordinate}.tif

- s1 : {tile}_{date}_S1_{x-pixel-coordinate}_{y-pixel-coordinate}.tif

- labels : {tile}_{x-pixel-coordinate}_{y-pixel-coordinate}.json

where tile is the Sentinel-2 tile number, x-pixel-coordinate and y-pixel-coordinate are the coordinates of the patch in the tile, and date is the acquisition date of the patch image, since a time series is extracted for each sensor. GR stands for Ground Reference, S2 for Sentinel-2 and S1 for Sentinel-1. Users will find 72,033 multi-temporal patches for Sentinel-2 and 1,012,227 multi-temporal patches for Sentinel-1. To facilitate reading the dataset and extracting dates, tools have been developed and are available on a code hosting service.
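The naming convention makes it straightforward to rebuild the time series of a patch from file names alone. The sketch below is not the tooling mentioned above; it assumes that the fields are separated by underscores and that the date is written as eight digits (YYYYMMDD), and the file names in the example are hypothetical.

```python
import re
from collections import defaultdict

# Assumed layout: {tile}_{date}_{S1|S2}_{x}_{y}.tif with an 8-digit date.
IMAGE_NAME = re.compile(
    r"^(?P<tile>[^_]+)_(?P<date>\d{8})_(?P<sensor>S[12])"
    r"_(?P<x>\d+)_(?P<y>\d+)\.tif$"
)


def parse_image_name(name):
    """Split an image patch name into (tile, sensor, date, x, y)."""
    match = IMAGE_NAME.match(name)
    if match is None:
        raise ValueError(f"unexpected patch name: {name}")
    fields = match.groupdict()
    return (fields["tile"], fields["sensor"], fields["date"],
            int(fields["x"]), int(fields["y"]))


def group_time_series(names):
    """Map (tile, x, y, sensor) to the sorted list of acquisition dates."""
    series = defaultdict(list)
    for name in names:
        tile, sensor, date, x, y = parse_image_name(name)
        series[(tile, x, y, sensor)].append(date)
    return {key: sorted(dates) for key, dates in series.items()}


# Hypothetical file names, for illustration only.
names = [
    "T31UGQ_20200512_S2_256_512.tif",
    "T31UGQ_20200301_S2_256_512.tif",
    "T31UGQ_20200305_S1_256_512.tif",
]
series = group_time_series(names)
print(series[("T31UGQ", 256, 512, "S2")])
```

Because the key contains the tile and the pixel coordinates, the same key without the sensor identifies the ground reference patch and the label file of the triplet.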

Baseline results

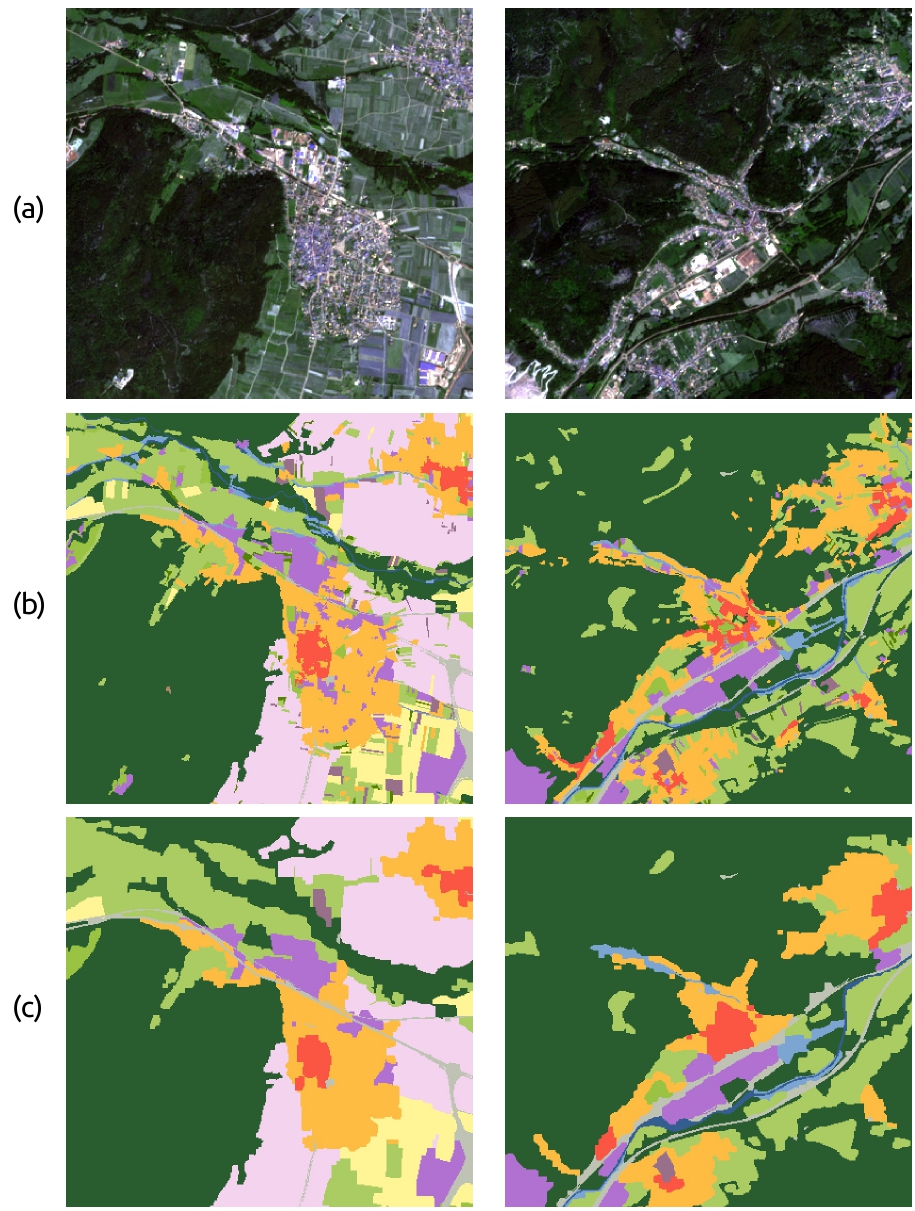

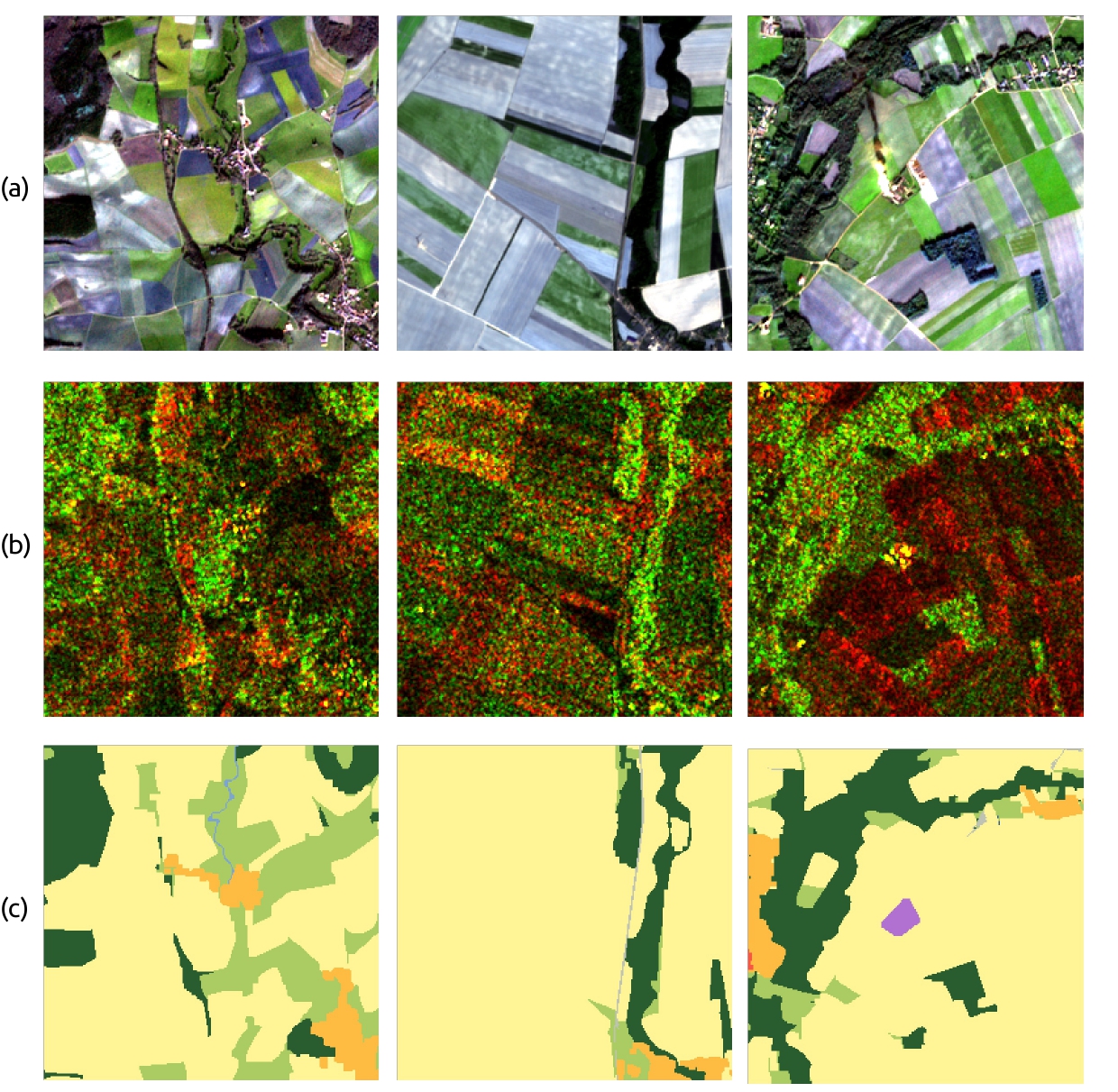

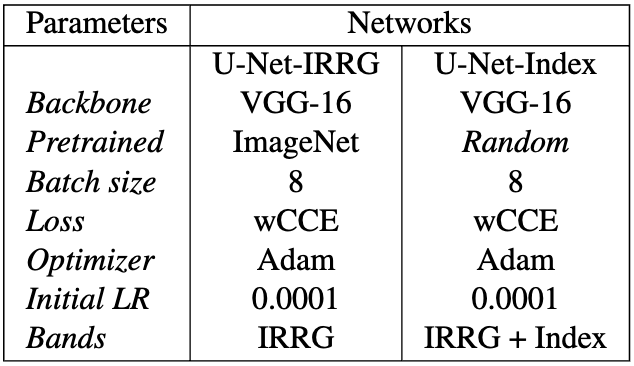

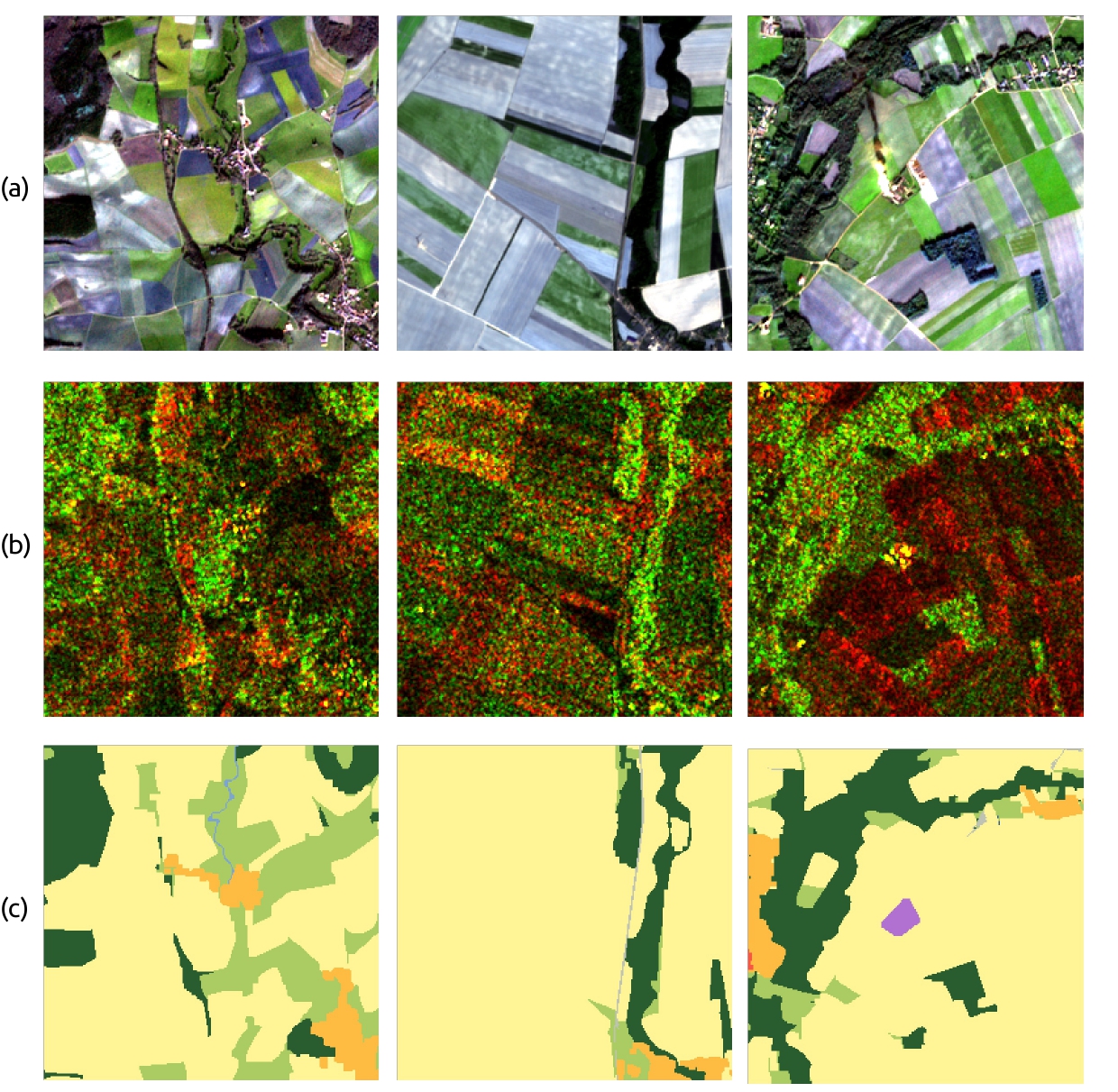

The first baseline results are obtained on urban areas using only single-date patches and the five urban classes of the dataset (Table 1, classes 1 to 5). These first experiments focus on the Moselle department (T31UGQ). We selected one subset of the tile for training and validation and a spatially separate subset as the test zone [21]. Two multiclass U-Net networks with VGG-16 as backbone are tested (Table 2). The first one, U-Net-IRRG, uses the 3 IRRG bands (InfraRed, Red and Green) with weights pre-trained on ImageNet [22]. The second one, U-Net-Index, uses the 3 IRRG bands together with 3 spectral and textural indices: the NDVI (Normalized Difference Vegetation Index), the NDBI (Normalized Difference Built-up Index) and the entropy [23] computed on the NDVI (eNDVI). The weights of U-Net-Index were randomly initialized. Because the dataset is imbalanced, a weighted categorical cross-entropy loss was used, assigning higher weights to the less represented classes [24]. The weights are the inverse of the class frequency. The city of Metz (Grand Est, France) was selected as the test area for the semantic segmentation of urban areas. In this experiment, class 6 aggregates all non-urban classes (classes 6 to 14 in Table 1).
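The two spectral indices and the class weighting can be sketched as follows. This is only an illustration under stated assumptions: the choice of Sentinel-2 bands (B8 for near infrared, B4 for red, B11 for shortwave infrared) is not given in the text, the weights are left unnormalized, and all function names are hypothetical.

```python
import math
from collections import Counter


def normalized_difference(a, b):
    """Return (a - b) / (a + b), or 0.0 when the denominator is zero."""
    total = a + b
    return (a - b) / total if total else 0.0


def ndvi(nir, red):
    """NDVI = (NIR - Red) / (NIR + Red); assumed bands: B8 and B4."""
    return normalized_difference(nir, red)


def ndbi(swir, nir):
    """NDBI = (SWIR - NIR) / (SWIR + NIR); assumed bands: B11 and B8."""
    return normalized_difference(swir, nir)


def inverse_frequency_weights(labels):
    """Weight of each class = 1 / (share of pixels carrying that class)."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {cls: total / n for cls, n in counts.items()}


def weighted_cross_entropy(probabilities, true_class, weights):
    """Loss for one pixel: -w[c] * log(p[c]) for the true class c."""
    return -weights[true_class] * math.log(probabilities[true_class])


# Toy label map: 8 pixels of the aggregated class 6, 2 pixels of urban class 1.
labels = [6] * 8 + [1] * 2
weights = inverse_frequency_weights(labels)

# The same predicted probability costs more on the rare class.
loss_rare = weighted_cross_entropy({1: 0.5, 6: 0.5}, 1, weights)
loss_common = weighted_cross_entropy({1: 0.5, 6: 0.5}, 6, weights)
```

With these weights an error on a rare urban class contributes more to the loss than the same error on the dominant non-urban class, which is the intended effect of the weighting.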

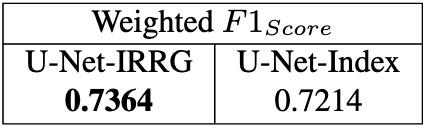

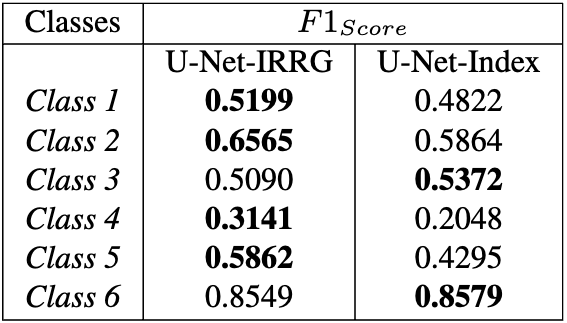

We selected 80% of the patches for training and 20% for validation, all outside the test area. Each network was trained for 100 epochs with Adam as the optimizer. Adam was preferred to SGD (Stochastic Gradient Descent) because it is more stable than the latter for semantic segmentation. Two metrics classically used for multi-class classification were selected: an F1-score weighted by the frequency of each class within the test area, to obtain an overall metric for each network (Table 3), and an unweighted F1-score, to evaluate each class separately (Table 4).
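The difference between the two metrics can be made concrete with a short sketch. It computes the per-class F1-score as 2TP / (2TP + FP + FN) and the weighted F1-score as the average of the per-class scores weighted by the number of reference pixels of each class. The labels below are toy values, not results from the paper, and the function names are hypothetical.

```python
def per_class_f1(y_true, y_pred):
    """Return {class: F1} with F1 = 2TP / (2TP + FP + FN)."""
    scores = {}
    for cls in sorted(set(y_true) | set(y_pred)):
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == cls and p == cls for t, p in pairs)
        fp = sum(t != cls and p == cls for t, p in pairs)
        fn = sum(t == cls and p != cls for t, p in pairs)
        denominator = 2 * tp + fp + fn
        scores[cls] = 2 * tp / denominator if denominator else 0.0
    return scores


def weighted_f1(y_true, y_pred):
    """Average the per-class F1 weighted by class frequency in y_true."""
    scores = per_class_f1(y_true, y_pred)
    n = len(y_true)
    return sum(score * y_true.count(cls) / n for cls, score in scores.items())


# Toy reference and prediction over six pixels.
y_true = [1, 1, 1, 2, 2, 6]
y_pred = [1, 1, 2, 2, 2, 6]
class_scores = per_class_f1(y_true, y_pred)
overall = weighted_f1(y_true, y_pred)
```

The weighted score summarizes a network in a single number dominated by the frequent classes, while the per-class scores reveal how the rare classes behave.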

The statistical results show better scores for the U-Net-IRRG method than for the U-Net-Index method, with weighted F1-scores of 0.7364 and 0.7214 respectively (Table 3). Moreover, four of the six classes studied have a better F1-score with U-Net-IRRG than with U-Net-Index (Table 4). These statistical results are confirmed by the visual results presented in Figure 5. The first method produces a more homogeneous classification of all classes over the test area, while the second method produces a much more fragmented classification and underestimates several classes.

These baseline models are the first ones and are applied to a single tile only (T31UGQ). Other deep learning methods for semantic segmentation that use the multimodal and multitemporal dataset are part of ongoing PhD research.

Conclusion

This paper presents MultiSenGE, a new large-scale multimodal and multitemporal benchmark dataset covering a large area in eastern France. It contains 8,157 triplets of 256 x 256 pixels made of Sentinel-1 dual-polarimetric SAR data, Sentinel-2 multispectral level 2A images and a LULC reference database built to obtain a typology adapted to the 10 m spatial resolution for the year 2020. Moreover, the proposed 14 classes are suitable for mapping French or European landscapes at the national scale. The triplets offer users the possibility to perform semantic segmentation and scene classification on multitemporal and multimodal data with large input patches. The baseline results are encouraging for urban area classification using only single-date optical patches. Other tests are ongoing and should improve these first results by using the multitemporal and multimodal imagery offered by this dataset as well as different deep learning techniques. This benchmark dataset for LULC remote sensing applications will soon be shared with the scientific community through the Theia Data and Services center (https://www.theia-land.fr/pole-theia-2/). It could also be enriched with additional data, such as complete Sentinel-2 SITS with cloud and snow masks.

Acknowledgements

We thank the Spatial Data Infrastructure GeoGrandEst for providing the reference data used in this study and the Theia Services and Data Infrastructure for the Sentinel-2A imagery. We would also like to thank the Peps Platform for the Sentinel-1 imagery. This work is supported by the French Research Agency (ANR-17-CE23-0015) and is part of a PhD project.

References

[1] A. Bégué, D. Arvor, B. Bellon, J. Betbeder, D. De Abelleyra, R. P. D. Ferraz, V. Lebourgeois, C. Lelong, M. Simões, and S. R. Verón, “Remote sensing and cropping practices: A review,” Remote Sensing, vol. 10, no. 1, 2018.

[2] B. Bellón, A. Bégué, D. Lo Seen, C. A. De Almeida, and M. Simões, “A remote sensing approach for regional-scale mapping of agricultural land-use systems based on NDVI time series,” Remote Sensing, vol. 9, no. 6, 2017.

[3] N. Kussul, M. Lavreniuk, S. Skakun, and A. Shelestov, “Deep learning classification of land cover and crop types using remote sensing data,” IEEE Geoscience and Remote Sensing Letters, vol. 14, no. 5, pp. 778–782, 2017.

[4] M. Rußwurm, C. Pelletier, M. Zollner, S. Lefèvre, and M. Körner, “BreizhCrops: A time series dataset for crop type mapping,” International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences ISPRS (2020), 2020.

[5] M. A. Wulder, J. G. Masek, W. B. Cohen, T. R. Loveland, and C. E. Woodcock, “Opening the archive: How free data has enabled the science and monitoring promise of Landsat,” Remote Sensing of Environment, vol. 122, pp. 2–10, 2012.

[6] P. D. Pickell, T. Hermosilla, R. J. Frazier, N. C. Coops, and M. A. Wulder, “Forest recovery trends derived from Landsat time series for North American boreal forests,” International Journal of Remote Sensing, vol. 37, no. 1, pp. 138–149, 2016.

[7] J. Inglada, A. Vincent, M. Arias, B. Tardy, D. Morin, and I. Rodes, “Operational high resolution land cover map production at the country scale using satellite image time series,” Remote Sensing, vol. 9, no. 1, 2017.

[8] D. Ienco, R. Interdonato, R. Gaetano, and D. Ho Tong Minh, “Combining Sentinel-1 and Sentinel-2 satellite image time series for land cover mapping via a multi-source deep learning architecture,” ISPRS Journal of Photogrammetry and Remote Sensing, vol. 158, pp. 11–22, 2019.

[9] M. J. Steinhausen, P. D. Wagner, B. Narasimhan, and B. Waske, “Combining Sentinel-1 and Sentinel-2 data for improved land use and land cover mapping of monsoon regions,” International Journal of Applied Earth Observation and Geoinformation, vol. 73, pp. 595–604, 2018.

[10] P. Dusseux, T. Corpetti, L. Hubert-Moy, and S. Corgne, “Combined use of multi-temporal optical and radar satellite images for grassland monitoring,” Remote Sensing, vol. 6, no. 7, pp. 6163–6182, 2014.

[11] M. Mngadi, J. Odindi, K. Peerbhay, and O. Mutanga, “Examining the effectiveness of Sentinel-1 and 2 imagery for commercial forest species mapping,” Geocarto International, vol. 36, no. 1, pp. 1–12, 2021.

[12] Q. Gao, M. Zribi, M. J. Escorihuela, and N. Baghdadi, “Synergetic use of Sentinel-1 and Sentinel-2 data for soil moisture mapping at 100 m resolution,” Sensors, vol. 17, no. 9, 2017.

[13] G. C. Iannelli and P. Gamba, “Jointly exploiting Sentinel-1 and Sentinel-2 for urban mapping,” IGARSS 2018 - 2018 IEEE International Geoscience and Remote Sensing Symposium, pp. 8209–8212, 2018.

[14] L. Ma, Y. Liu, X. Zhang, Y. Ye, G. Yin, and B. A. Johnson, “Deep learning in remote sensing applications: A meta-analysis and review,” ISPRS Journal of Photogrammetry and Remote Sensing, vol. 152, pp. 166–177, 2019.

[15] G. Sumbul, A. de Wall, T. Kreuziger, F. Marcelino, H. Costa, P. Benevides, M. Caetano, B. Demir, and V. Markl, “BigEarthNet-mm: A large-scale, multimodal, multilabel benchmark archive for remote sensing image classification and retrieval [software and data sets],” IEEE Geoscience and Remote Sensing Magazine, vol. 9, no. 3, pp. 174–180, Sep. 2021.

[16] M. Schmitt, L. H. Hughes, C. Qiu, and X. X. Zhu, “SEN12MS - A curated dataset of georeferenced multi-spectral Sentinel-1/2 imagery for deep learning and data fusion,” CoRR, vol. abs/1906.07789, 2019.

[17] O. Hagolle, “theia_download.” https://github.com/olivierhagolle/theia_download (21 February 2020), 2021.

[18] F. Filipponi, “Sentinel-1 GRD preprocessing workflow,” Proceedings, vol. 18, no. 1, 2019.

[19] T. Koleck and Centre National des Etudes Spatiales (CNES), “S1Tiling.” https://gitlab.orfeo-toolbox.org/s1-tiling/s1tiling (10 September 2021), 2021.

[20] O. Troyanskaya, M. Cantor, G. Sherlock, T. Hastie, R. Tibshirani, D. Botstein, and R. Altman, “Missing value estimation methods for DNA microarrays,” Bioinformatics, vol. 17, pp. 520–525, Jul. 2001.

[21] M. Saraiva, E. Protas, M. Salgado, and C. Souza, “Automatic mapping of center pivot irrigation systems from satellite images using deep learning,” Remote Sensing, vol. 12, no. 3, 2020.

[22] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei, “ImageNet: A large-scale hierarchical image database,” in 2009 IEEE Conference on Computer Vision and Pattern Recognition, 2009, pp. 248–255.

[23] R. M. Haralick, I. Dinstein, and K. Shanmugam, “Textural Features for Image Classification,” IEEE Transactions on Systems, Man and Cybernetics, vols. SMC-3, no. 6, pp. 610–621, 1973.

[24] N. Audebert, B. Le Saux, and S. Lefèvre, “Beyond RGB: Very High Resolution Urban Remote Sensing With Multimodal Deep Networks,” ISPRS Journal of Photogrammetry and Remote Sensing, vol. 140, pp. 20–32, 2018.